Image generation has become a fast-moving field within Artificial Intelligence (AI), with practical applications in marketing, sales, and e-commerce. Models that turn text descriptions into images change how visual content is produced and, with it, how businesses communicate with their audiences. As these models improve, the gap between what a text prompt describes and what the generated image shows keeps narrowing, which opens up new creative possibilities.

Against this backdrop, the Salesforce Research team has introduced XGen-Image-1, a generative model that turns text into images using image-generative diffusion models. The model was trained on a budget of $75K using TPUs and the LAION dataset, a notably low cost, and its performance is comparable to that of Stable Diffusion 1.5 and 2.1, which have been among the leading image-generation models.

Two findings are central to the team’s results. First, combining a latent model, the Variational Autoencoder (VAE), with readily available upsamplers lets the model train at resolutions as low as 32×32 while still generating high-resolution 1024×1024 images. Training at such low resolution substantially reduces cost without sacrificing image quality. Second, automated rejection sampling at inference time, in which candidate images are scored with PickScore and the best ones are kept, improves results substantially and makes high-quality outputs more consistent.
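The rejection-sampling step can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not Salesforce’s implementation: `generate` and `score` are hypothetical callables standing in for the diffusion model and a PickScore-style preference scorer, and the seed-based loop, the `max_samples` budget, and the optional early-stop `threshold` are illustrative choices.

```python
from typing import Callable, Optional, Tuple, TypeVar

Image = TypeVar("Image")  # stands in for whatever the (hypothetical) generator returns


def rejection_sample(
    prompt: str,
    generate: Callable[[str, int], Image],     # HYPOTHETICAL: (prompt, seed) -> image
    score: Callable[[str, Image], float],      # HYPOTHETICAL: PickScore-style (prompt, image) -> score
    max_samples: int = 8,
    threshold: Optional[float] = None,
) -> Tuple[Image, float, int]:
    """Best-of-n rejection sampling.

    Draws candidates with seeds 0, 1, 2, ... and scores each one. Stops early
    as soon as a candidate reaches `threshold` (if given); otherwise returns
    the highest-scoring candidate after `max_samples` draws. The third return
    value is the number of candidates that were generated.
    """
    if max_samples < 1:
        raise ValueError("max_samples must be at least 1")
    best_image: Optional[Image] = None
    best_score = float("-inf")
    for seed in range(max_samples):
        image = generate(prompt, seed)
        s = score(prompt, image)
        if s > best_score:
            best_image, best_score = image, s
        if threshold is not None and s >= threshold:
            return image, s, seed + 1  # good enough: stop spending compute
    return best_image, best_score, max_samples


if __name__ == "__main__":
    # Toy stand-ins so the sketch runs without a model: the "image" is just
    # the seed, and the "scorer" prefers images close to 5.
    def toy_generate(prompt: str, seed: int) -> int:
        return seed

    def toy_score(prompt: str, image: int) -> float:
        return -abs(image - 5)

    print(rejection_sample("a red bicycle", toy_generate, toy_score))                  # (5, 0, 8)
    print(rejection_sample("a red bicycle", toy_generate, toy_score, threshold=-1.0))  # (4, -1, 5)
```

The early-stop threshold trades a little quality for fewer generations; without it, the function always spends the full budget and returns the best-scoring candidate.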

XGen-Image-1 is a latent diffusion model. Diffusion models broadly fall into two families: pixel-based models, which denoise the image’s pixels directly, and latent-based models, which denoise autoencoded representations of the image in a compressed spatial domain. After weighing training efficiency against output resolution, the team combined the two approaches by integrating a pretrained autoencoder with pixel upsampling models.
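The resolution arithmetic behind this two-stage design can be made concrete. The sketch below assumes, for illustration only, a VAE that downsamples by a factor of 8 per side (as Stable Diffusion’s VAE does) and a 4× pixel upsampler. The article gives only the 32×32 and 1024×1024 endpoints, so this split between the stages is an assumption, and `TwoStagePipeline` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoStagePipeline:
    """Resolution bookkeeping for a latent-diffusion + pixel-upsampler pipeline."""

    latent_side: int = 32      # side length the diffusion model works at (32x32, from the article)
    vae_factor: int = 8        # ASSUMPTION: VAE spatial downsampling factor (8 in Stable Diffusion's VAE)
    upsampler_factor: int = 4  # ASSUMPTION: a 4x off-the-shelf pixel upsampler

    def decoded_side(self) -> int:
        # Side length after the VAE decoder maps the latent back to pixels.
        return self.latent_side * self.vae_factor

    def output_side(self) -> int:
        # Side length after the pixel upsampler.
        return self.decoded_side() * self.upsampler_factor

    def position_ratio(self) -> int:
        # How many times more spatial positions a model denoising directly at the
        # output resolution would have to handle, compared with the latent grid.
        return (self.output_side() // self.latent_side) ** 2


if __name__ == "__main__":
    p = TwoStagePipeline()
    print(p.decoded_side(), p.output_side(), p.position_ratio())  # 256 1024 1024
```

Under these assumptions, the diffusion model denoises a grid with 1,024 times fewer spatial positions than the final image has pixels. This counts positions only; channel counts and the quadratic cost of attention layers mean the real compute savings differ from this ratio.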

Data plays a central role. XGen-Image-1 was trained on LAION-2B, filtered to keep only images with an aesthetic score of 4.5 or higher. The dataset spans a wide range of concepts, which supports the model’s ability to generate diverse and realistic images. The team trained on TPU v4s and had to solve infrastructure problems along the way, notably around data storage and checkpoint saving.
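The aesthetic filter amounts to a threshold on a precomputed per-image score. Here is a minimal sketch, assuming each record is a dictionary that carries its score under a hypothetical `aesthetic` key; the actual LAION metadata layout and the team’s filtering code are not described in the article.

```python
from typing import Any, Iterable, Iterator, Mapping

AESTHETIC_THRESHOLD = 4.5  # from the article: keep samples scoring 4.5 or higher


def filter_by_aesthetic(
    records: Iterable[Mapping[str, Any]],
    threshold: float = AESTHETIC_THRESHOLD,
    key: str = "aesthetic",  # HYPOTHETICAL field name for the precomputed score
) -> Iterator[Mapping[str, Any]]:
    """Lazily yield only the records whose aesthetic score is >= threshold.

    Records with a missing score are dropped rather than guessed at.
    """
    for record in records:
        score = record.get(key)
        if score is not None and score >= threshold:
            yield record


if __name__ == "__main__":
    sample = [
        {"url": "a.jpg", "caption": "a lighthouse at dusk", "aesthetic": 5.1},
        {"url": "b.jpg", "caption": "blurry screenshot", "aesthetic": 3.2},
        {"url": "c.jpg", "caption": "a bowl of ramen", "aesthetic": 4.5},
        {"url": "d.jpg", "caption": "no score available"},
    ]
    kept = list(filter_by_aesthetic(sample))
    print([r["url"] for r in kept])  # ['a.jpg', 'c.jpg']
```

Writing the filter as a generator keeps memory use flat, which matters when streaming a dataset the size of LAION-2B.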

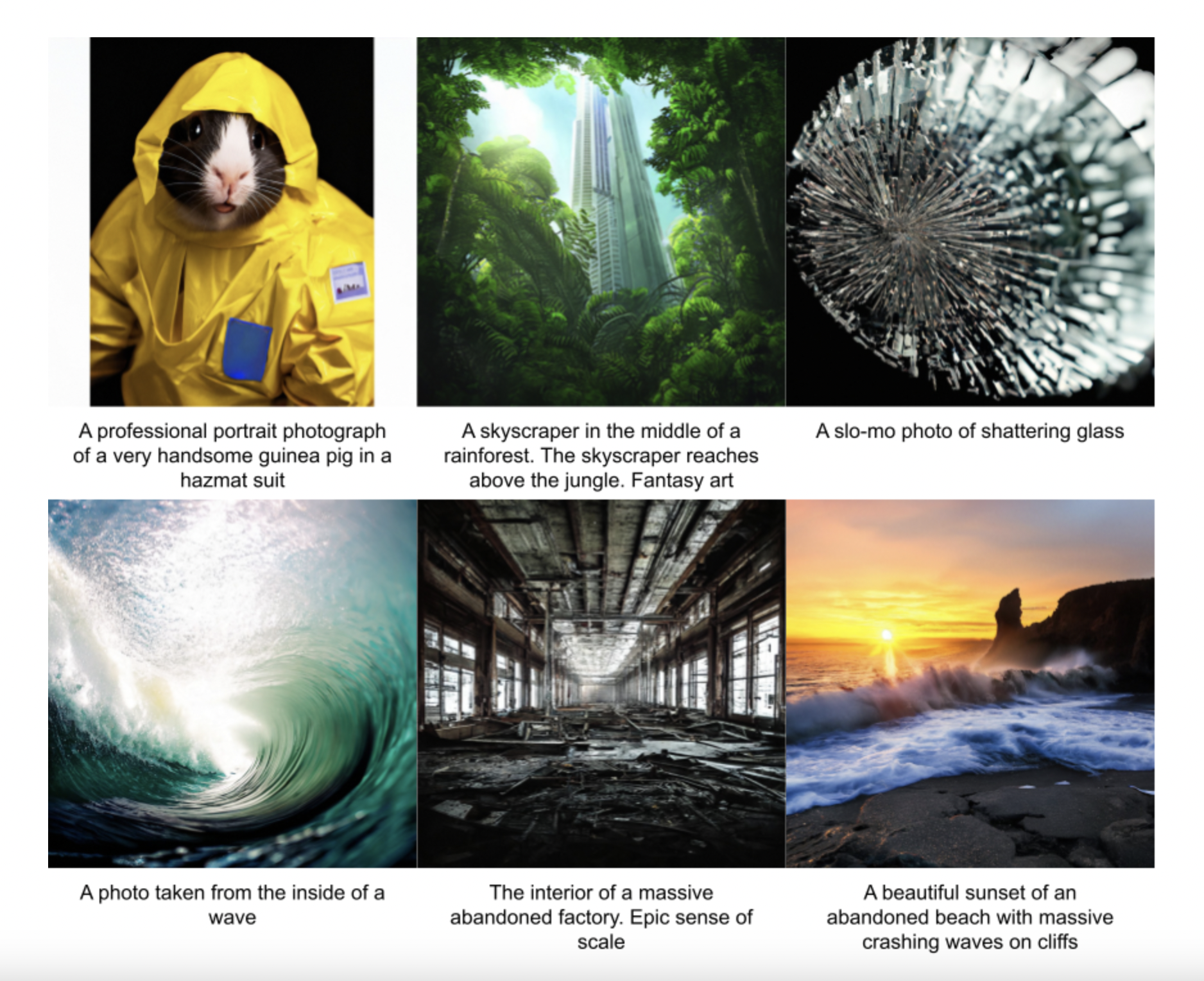

To evaluate XGen-Image-1, the team compared it with Stable Diffusion 1.5 and 2.1 using automatic metrics such as CLIP Score and FID, as well as human evaluation. The results are strong: the model does well on prompt alignment and photorealism, achieves better FID scores than the Stable Diffusion models, and is competitive with them in human evaluations. Rejection sampling proved an effective way to improve the final outputs, and inpainting can be applied on top of it to fix parts of an image that came out poorly.
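For readers less familiar with FID, its standard definition explains why a lower score is better. Given Inception-v3 feature embeddings of real and generated images, with means $\mu_r, \mu_g$ and covariances $\Sigma_r, \Sigma_g$, FID is the Fréchet distance between the two Gaussians fitted to those features:

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```

Identical feature distributions give an FID of 0, so surpassing Stable Diffusion on FID means XGen-Image-1 scored lower. CLIP Score runs the other way: it measures image-text similarity in CLIP’s embedding space, so higher is better.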

Overall, XGen-Image-1 shows how the Salesforce Research team combined latent models, off-the-shelf upsamplers, and automated selection strategies to build a competitive text-to-image model at low cost. As development continues, these insights could influence how AI image-generation systems are built and trained, with effects across many industries.

Check out the Reference Article. All credit for this research goes to the researchers on this project.

Madhur Garg is a consulting intern at MarktechPost. He is currently pursuing his B.Tech in Civil and Environmental Engineering at the Indian Institute of Technology (IIT), Patna. He has a strong passion for Machine Learning and enjoys exploring the latest technological advances and their practical applications. With a keen interest in artificial intelligence and its diverse applications, Madhur aims to contribute to the field of Data Science and apply it across various industries.

Salesforce Researchers Introduce XGen-Image-1: A Text-To-Image … – MarkTechPost